Making Candlesticks in the Age of Electricity

To mentally prepare for the future of knowledge professions, look at Gen X creatives

“Every generation has its burdens. The particular plight of Gen X is to have grown up in one world only to hit middle age in a strange new land. It’s as if they were making candlesticks when electricity came in. The market value of their skills plummeted.”

Reading this NYT article, in which Gen Xers in creative professions describe how they’ve been pushed out of the market, I couldn’t help but think that this will be the story of so many white-collar / knowledge work professionals sooner rather than later.

In the past, industry shifts requiring folks to switch careers en masse usually happened infrequently enough that they mainly affected the next generation.

On the rare occasions when they happened more frequently, support was often inadequate and the societal consequences disastrous (we are still suffering the social and political consequences of manufacturing being outsourced decades ago, for example).

Globalisation, deregulation, and technology sped the cycle up over the past few decades.

AI (and the continuation of those earlier trends) is supercharging it, even if globalisation, for example, is retreating in some ways.

“The cruel irony is, the thing I perceived as the sellout move is in free-fall.” (Chris Wilcha, film director)

It’s tempting to point to what AI can do right now and say “it’ll never be good enough [to take my job specifically]”. But that ignores the fact that AI isn’t just improving over time; the rate of that improvement is also increasing.

It’s just like when Western countries shrugged off Covid in February 2020, saying ‘it’s just a handful of cases’ and ignoring the fact that cases were rising exponentially.

Saying that AI will never take over legal, consulting, software, or executive work because it makes mistakes today misses the point: nobody [reasonable] thinks it’s today’s ChatGPT that will.

Not all fields will be impacted in the same way. Some, like software (my prediction, anyway), will expand; see the Jevons Paradox.

Others, I suspect, are bound to contract, or become hopelessly commoditised (and then probably contract, as folks who entered those fields for the payout decide the equation is no longer worth it).

Most, I wager, will change shape: fields that used to have a pyramid-like structure (countless juniors, many seniors, a few execs/partners) will be rebased to have more even numbers across levels.

As that happens, many of us will either decide to move laterally or do a full-on reinvention.

Maybe many Product Managers will become Product Engineers. Maybe others will become nurses, or shop owners, or something that doesn’t exist today (Holodeck Engineer, anyone?)

The so-what of all this depends on your point of view:

As a (selfish) individual: Accept that change is a constant, don’t get complacent, pick up new skills, prepare for change

As a (concerned) citizen: Lobby for fair regulation and taxation that balances incentivising innovation with protecting the losers/victims of it

As a corporate leader: Currently, far too many are playing a short-termist game of layoffs and not hiring any juniors, which will backfire in the longer run

I don’t think it’s all doom and gloom. But equally, there’s a lot of misplaced optimism coming from the folks parroting the CEOs of companies like Anthropic and OpenAI (who have a huge vested interest in being seen as the paragons of progress, and AI as the solution to all of our ills).

Two things can be true:

AI will revolutionise our economies and usher in a new era of productivity for humanity writ large

AI’s rapid rollout will devastate communities and individuals that aren’t able, willing, or ready to ride the wave

“That TV spot you spent six months on now becomes a TikTok execution you spend six days on.”

— Greg Paull, marketing consultant

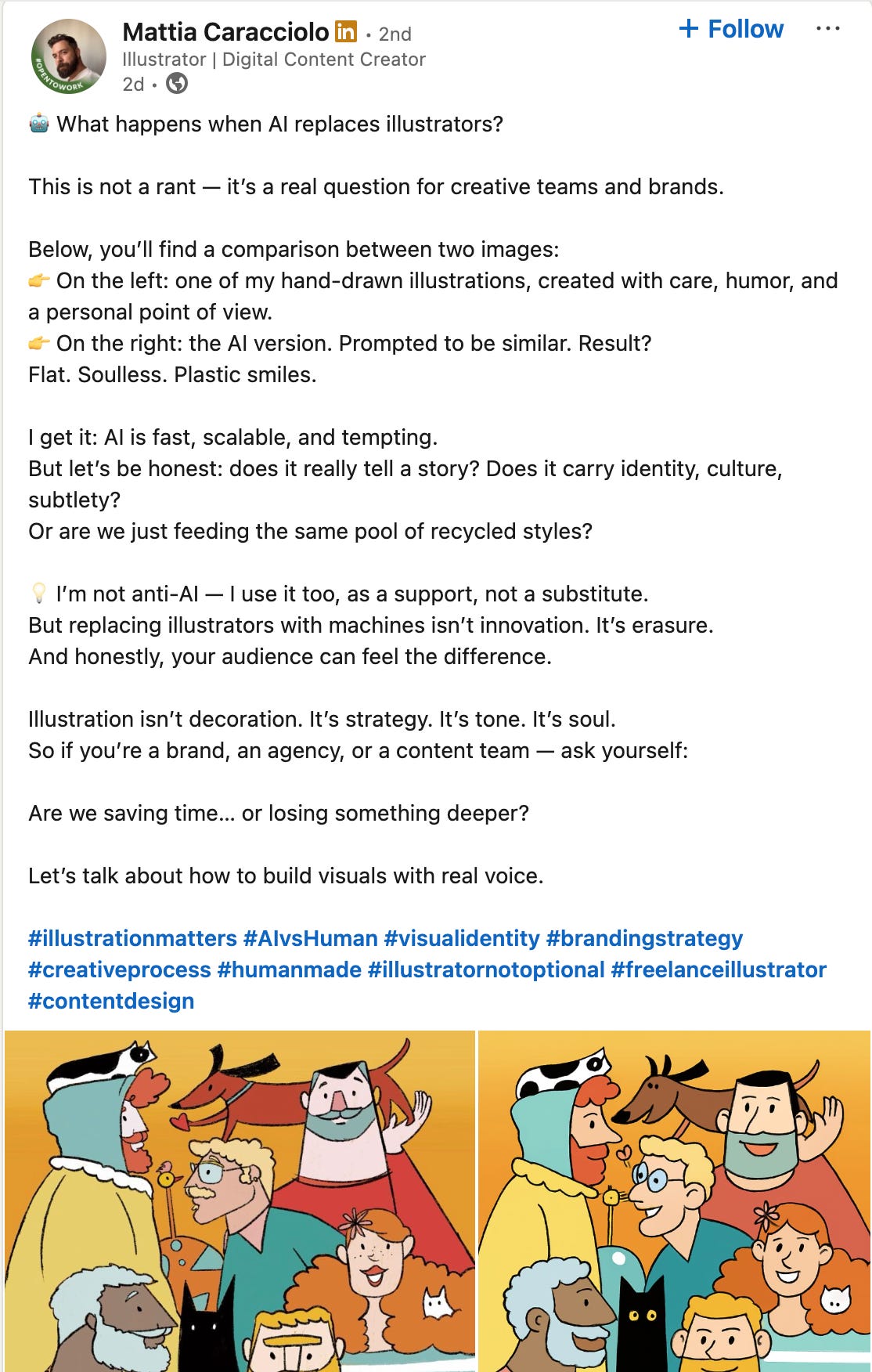

Many people in fields currently being swept away are investing in re-skilling. Meanwhile, there are others who are trying to embrace AI but (in my view) are still too attached to their craft. You can already see these tensions playing out in creative fields like design and illustration. Look at this post, for example, and it’s actually a fairly moderate one:

The author says that the AI-generated image is “soulless” and unsubtle, and just recycles existing styles. I have three main objections to this:

Not to be glib, but what does “soulless” really mean here? I personally don’t see it. I do agree with one of the commenters that LLMs make all designs look the same, but if that bothers enough people, will we really let things stay that way? Or is it just because we’re still in the early years of AI art?

More seriously, professionals made the same argument about “soulless” digital art versus real art made with pencil or oil paint. Tron was denied an Oscar because using computers to create special effects was considered “cheating” in 1982. Photographers argued that digital photography isn’t art (2006). And so on.

For commercial designs, does ‘soul’ matter? Maybe sometimes, but certainly not always. It’s why so many companies outsource illustration - to agencies, to freelancers, to ChatGPT. It’s not a core competency or differentiator. Good enough is good enough.

Scrolling down the comments on the post above, some folks echo my thoughts, others rightly say they prefer the AI version (art and aesthetics are subjective, after all), and then there are the folks who are totally unwilling to engage:

If your stance is “we graphic designers must condemn all uses of AI”, you won’t get very far. If you believe that LLMs are illegitimate because of the way OpenAI and others got their hands on training data, or because of their high energy consumption, fair enough. But what will the condemnation achieve? Will business owners be swayed to pay orders of magnitude more (and slow down)? Will your government ban ChatGPT (and also be able to enforce that ban)? Will all your colleagues side with you, or will some leap at the opportunity to beat you in the market?

Let’s also be mindful of the self-serving nature of such arguments. Would you be making the same arguments if the livelihoods being threatened were only those of software developers? Or factory workers? What if AI had developed the way we expected it to 10-20 years ago, and automated jobs like taxi and truck driving long before knowledge workers were on the chopping block?

Of course, an argument can be self-serving and also correct. But it’s worth examining our opinions and the biases that led us to hold them.

I’ll give you an example of my own: in the pre-LLM era, one of my superpowers and genuine differentiators against most of my peers and colleagues was the ability to explain complex technical concepts in a way non-expert audiences could understand. It was a really valuable and appreciated skill. Today, that’s fairly trivial to achieve with ChatGPT, which often does it much better than me, in a fraction of the time. I could be bitter about it (and a small part of me sure is), but that won’t serve me in any way. Instead, I now use it to accelerate my own thinking, and focus on skills that aren’t as commoditised to serve as my USPs.

To be clear, I think both the IP and energy-intensity criticisms of LLMs are very valid, and more needs to be done to address the inequalities inherent in the way LLMs have been built and are being rolled out. I worry in particular that we as a society will decide to disregard the intellectual property claims of all the artists, journalists, and authors whose works were, arguably illicitly, fed into foundation models. I doubt we’ll see a successful class action that fully redresses those groups. I’m a bit more optimistic on the energy consumption & production front, especially because that’s more a technical problem than a moral and legal one.

We aren’t entitled to our craft remaining relevant in a world where it’s been commoditised by technology, any more than a tanner, a typesetter, or a blacksmith was. Society moved on, and we accepted it. No one’s lobbying for the return of lamplighters or switchboard operators. Why would knowledge work be any more sacred?

Maybe we’ll get to a point where doing product management or UX design is a vintage hobby, just as there are folks learning blacksmithing today 😆

Anyway, that was today’s ramble. I want to try to post here more regularly again; I appreciate it’s been half a year of quiet. Thanks for subscribing/reading, and if you disagree, argue with me in the comments!!

🌱 In other news

#1: Trading pints for hikes

We had some great data, AI, and product hikes in New York and Barcelona in the last month. It’s not everyone’s cup of tea, but if you want to mix things up with your events, give touching grass a go!!

#2: New Data & AI PM training cohort

I’ve just announced a new-and-improved cohort of my ROI of Data & AI programme for July. I’ve taken the feedback & my own insights from running 4 cohorts with 39 participants so far to make this one the best one yet.

You can read all about it on the course page so I won’t repeat all the info here. But I will leave a few quotes from past participants:

💬 Fortune 50 Director: “We had a GenAI proposal with no budget. By estimating the value and showing potential productivity gains, we unlocked buy-in and got it fast-tracked.”

💬 Data Engineering Consultant: “We used to go off and work in a silo for months before getting feedback […] we now catch misalignments early instead of finding out later that what we built isn’t accepted.”

💬 Head of Product: “The interactive elements of the course were great, it was beneficial to have discussions with others in similar roles across multiple sectors! Highly recommend to anyone who's working on data transformation projects/products.”

💬 AI Product Manager: “As a new AI Product Manager, this course gave me the essential tools to communicate ROI to C-level stakeholders and conduct effective discovery. It’s a must for anyone looking to level up with the right frameworks for AI product work.”

💬 Fortune 50 Sr. Manager: “[One month] after the training, I started challenging assumptions more proactively. In one case, that led to de-scoping features a market wasn’t even using - avoiding $200,000 in unnecessary delivery”

💬 Data Science Consultant: “We managed to renew the project for another year while growing the team we had because we will work in more areas. The client appreciated that I took the initiative to gain more knowledge of the project, which helped her make decisions and define solutions.”

💬 Data Product Owner: “Asking myself why we’re doing things has allowed me to recognise when work is unnecessary. It’s helped me focus on real opportunities and avoid wasted effort on things that won’t be used.”

We are already seeing the impacts of GenAI on knowledge workers in data & tech roles, and in many areas, junior roles aren't being filled at all.

However, I am also seeing positive impacts flowing through: in my teams, we are starting to use AI to help speed up many areas of data management, and it is giving my teams the firepower to do much more with the same amount of resources.

People do need to adapt: with AI, data analysts and product managers will be expected to take on more technical tasks, such as data modelling and building business transformations.